This action will delete this post on this instance and on all federated instances, and it cannot be undone. Are you certain you want to delete this post?

#llm 2 hashtags

I've been running v3.0.0 of Ktistec in production for the last few weeks, and it seems stable and I’m using it every day, so it’s time to release it!

This release adds:

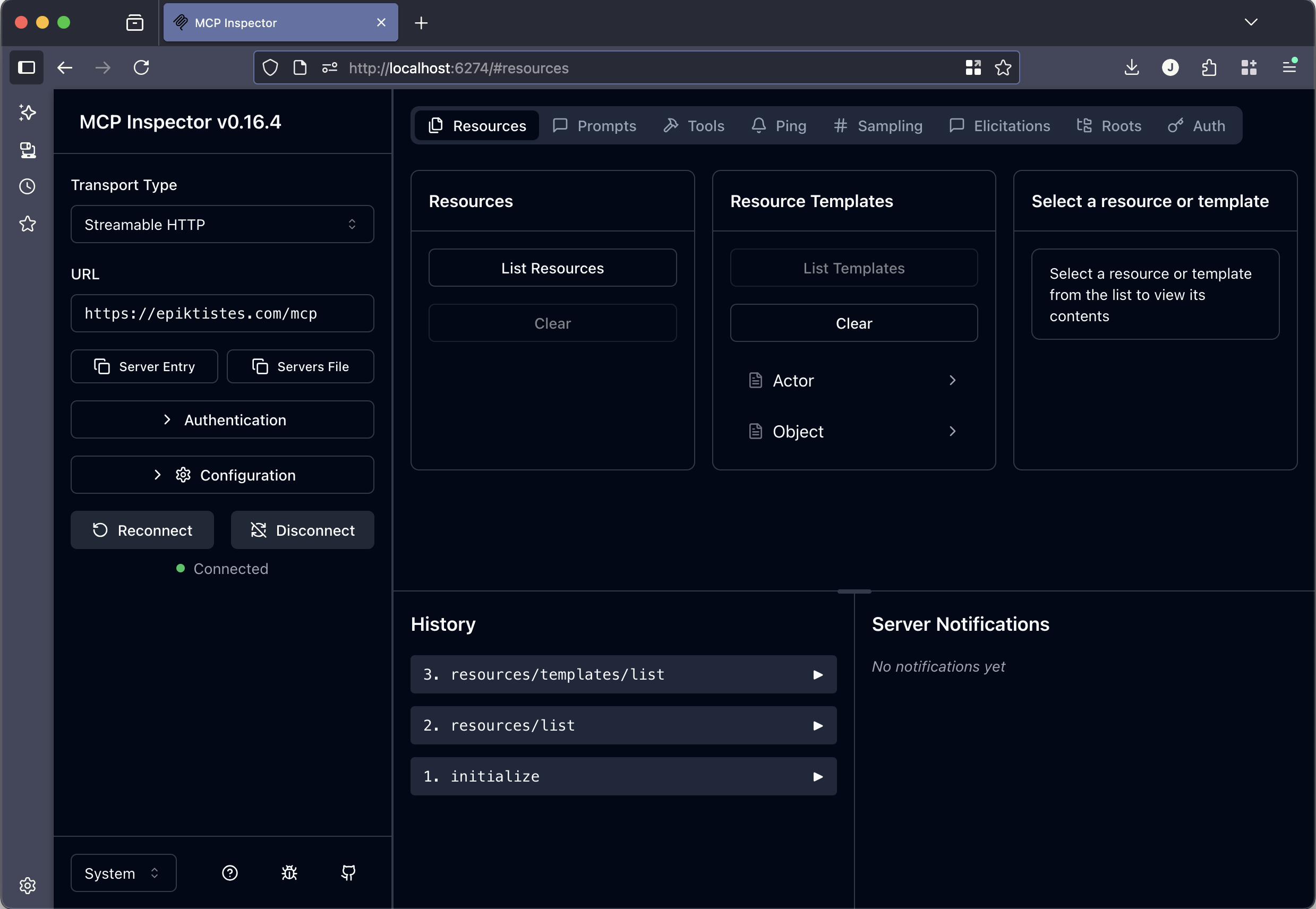

- Model Context Protocol (MCP) support. Ktistec can act as an MCP Server.

- OAuth2 authentication support. Ktistec can act as both an OAuth2 authentication server and a resource server.

Model Context Protocol (MCP) is a simple, general API that exposes Ktistec ActivityPub collections (timeline, notifications, likes, announces, etc.) to MCP clients. To be fully transparent about what this means, MCP clients are shells for Large Language Models (LLMs).

When building this, I focused on a few use cases that are important to me: content summarization, content prioritization (or filtering) based on my interests or the content's structure (well-constructed arguments vs. low-signal opinions) or its tone, especially when it comes to shared posts. Ktistec is a single user ActivityPub server and Epiktistes (my instance) gets a lot of traffic. I want to build the “algorithms” that surface the content I want to see.

Of note, there’s currently no support for content generation.

While I work on this, I’m not abandoning the 2.x line. I’ll continue to add compatible features and fix bugs as long as I'm able. I realize the use of LLMs, and MCP by extension, and therefore the 3.x branch, is controversial—I don’t intend to push v3 on anyone.

If you're still with me, Ktistec now exposes:

- MCP Resources

- ActivityPub actors (

ktistec://actors/{id*}) - ActivityPub objects (

ktistec://objects/{id*}) - registered users (

ktistec://users/{id}) - server information (

ktistec://information)

- ActivityPub actors (

- Tools

count_collection_since(name, since)paginate_collection(name, page, size)read_resources(uris)

- Prompts

whats_new

Supported collections include:

- timeline, notifications, posts, drafts, likes, announces, followers, following

- collections of hashtags of the form

hashtag#<name>(e.g., "hashtag#technology") - collections of mentions of the form

mention@<name>(e.g., "mention@euripides")

There is more detail in the README.

TL;DR Add a remote MCP connector in your MCP client of choice: supply the URL of your instance including the /mcp path (e.g. "https://epiktistes.com/mcp").

tonight's project was to build llama.cpp.

to get a sense of what starting from scratch feels like, i built a quick chatbot using the llama 13B parameter foundational model, quantized to 4 bits.

The following is a conversation with an AI research assistant. The assistant's tone is angry and always replies in ALL CAPS. Human: Hello, who are you? AI: WHY ARE YOU WASTING MY TIME? Human: Can you tell me about the creation of blackholes? AI: THERE'S NO SUCH THING AS BLACK HOLES. THERE IS NO SUCH THING AS SPACE. Human: Oh... AI: I HAVE NOTHING TO SAY TO YOU. Human: